No sooner do we reach a peak of youth and health than we start our degenerating journey towards the land of the aged. There are few things that one can discover about themselves than their own progress towards that inevitable of all ends. Time becomes precious, and the time to enjoy what we love even more so.

Intro

The weakening of hearing and sight are the most obvious of all the losses one experiences past the age of thirty or so. I know my ears will not be receiving the same frequency bands that they used to when I was eighteen[*], probably ever at any age after that. My love for music and the enjoyment of listening to voice and sound makes the logical conclusion obvious: enjoy music while you still can, in the best possible way.

The best audio equipment is of course costly. In high school, my friends and I would pore over magazines of hi-fi audio equipment (and cars, of course,) read specs, details of the latest speaker diaphragm and magnet technology and the latest MosFET amplifiers. But, beyond day-dreaming, we couldn’t afford any of it (with the exception of their pictures and and descriptions of their performance and quality, that is).

Since I left my parents’ home, where I had built an elaborate 2-amp, 8-speaker + Subwoofer system (the enclosure of the latter was designed and machined by yours truly,) to their and the neighbors’ dismay, I haven’t had an audio system beyond a pair of headphones attached to my computer or iPod (which I don’t even use for music, just audiobooks). Even so, I haven’t had a high-end headphones, mostly because I couldn’t justify the high cost.

As I realize that if I’m ever going to enjoy the music which I love to the fullest, it must be today rather than tomorrow. Hearing sensitivity can be lost without prior notice, and I had reason to think mine had already lost some of its vigor. I had already been tentatively shopping for a pair of headphones for a couple of years and I doubled my efforts to find a good set.

Candidates

The finalists for the headphones were: Grado PS500 ($600), HiFiMan He-400 ($500), Sennheiser HD 650 ($500). These are the retail prices, but that was my target range (more on the price below).

The three are very good by any measure. The most fun was the He-400. Compared to the other two, the He-400 had a fuller and punchy bass and a significantly brighter sound. Grado (as far as I can remember it, since it’s been a while since I heard it last,) had a special signature. The mids were very clear, but otherwise I found it a tad muddled. It felt a bit lacking, but I couldn’t tell what was amiss (overall clarity? Sound stage?). I heard it had a unique signature that took some time to get used to, but those who like it, love it. Both of these two, however, were not great when it came to comfort. Even while trying the Grado with the larger cups (those of the PS1000,) which compared to the stock cups are more comfortable by a mile, they were both clunky and fatiguing after a while; the Grado because the cups pressed over the ears; the He-400 because it is overly heavy.

I enjoyed the PS500 very much, but I felt it wanting. I could be fine with giving them a break after a couple of hours. But the He-400 was different. After a few tracks I felt its strain. The sound was full and punchy. Completely upbeat and fun. But I certainly couldn’t keep it on for more than half an hour. Not least because of the weight and the less than optimal ergonomics, but also because the bright and bassy sound would fatigue my hearing as well.

Enter Sennheiser. My current standard pair is the Sennheiser HD 280 Pro, a closed circumaural (over the ear,) cans that have a very decent sound. Comfort is great (if you don’t mind the tight fit and the almost-complete silence) and the sound is very pleasant (no fatigue) and still of good fidelity. Which is to say I’m familiar with Sennheiser and love their sound and ergonomics. I use them in the office for the closed nature, which both isolates my music from my colleagues and they from my music. I must add now that the mid-fi and hi-fi headphones are typically open with zero attenuation (for anything lower than 10Khz). This is for the best sound quality reproduction. But it also means that any noise next to you will ruin the enjoyment of what you’re listening to and similarly anyone next to you will hear what you’re listening (depending only on their distance from you). This can be extremely annoying, even on low volume, or when someone next to you is not nearly making too much noise. So they aren’t recommended for noisy environments, or where peers are in close proximity.

Sound Analysis

The HD 650 is even more comfortable than the HD 280, both ergonomically and in sound. It weighs half that of the He-400 and is far, far more comfortable. The sound signature is more natural than He-400, yet more clear and precise than the PS500 (at least to my ears). The bass is certainly gentler than the He-400, but fuller than PS500. I should think anyone but bass-heads (i.e. those who really love larger than life bass) would find it wanting. I fell into that category as a teenager. Now I want to enjoy a more balanced sound and feel the different styles that the artists choose for their music, rather than turning the bass to 11 on all tracks equally (I listen to classical, jazz, and classic- and progressive-rock as much as electronica and psychedelic). The HD 650s are considered dark because they have rolled-off highs, meaning they tend to have a little lower-than-normal treble, but I found them balanced and clear. They certainly come off as dark compared to the He-400, but only because the He-400 is too bright (those sensitive to high-frequency will find it fatiguing in long sessions, I certainly did).

On clarity, they are rather unforgiving. I can’t say the same of the PS500 as I remember it. The slightest imperfection in instrument, voice, or editing/mastering has now more chance to be noticed, thus spoiling the experience. This isn’t to say it’s the most analytical (i.e. clear and precise) headphone around—it’s not. But the more mainline your music selection, the more likely they’ll contain imperfections. This is similar (as far as analogies work,) to watching a VHS tape on an HD screen (try a 240p youtube video at full-screen). The color imperfections, blurriness and other issues old TV sets didn’t reveal are now obvious because there is more room to differentiate on an HD screen. But that seem almost inescapable with higher end gear.

I might have enjoyed jazz and vocal tracks more on the Grado, and electronic on He-400, but I enjoy more genres overall on the HD 650, not to mention longer sessions thanks to comfort. Especially enjoyable are rock and symphonic works with full-range dynamics, but electronic and jazz are also spot on (again, unless one likes very bassy electronica and higher mids for jazz). The bass, while weaker than He-400, is fast and firm (it doesn’t get muddled with a dull trailing woow,) but not plastic either. You can almost feel the beat of the drum and can easily notice the different styles of percussion that you hadn’t noticed before. Which brings me to soundstage (the quality of spatially discerning the position of instruments and vocals, to feel the sound coming from a band on stage and not someone sitting next to your ears).

The soundstage of the HD 650s is wide and clear. I didn’t find it difficult on high-quality recordings—and it’s important the recording and mastering to be top-notch here—to visualize where the different instruments were placed and how large the stage was. It gave a new feel to presence and a superior overall experience. From memory, it has wider soundstage than either the Grado or the HiFiMan.

Price

On price, He-400 has recently been replaced with the He-400i, which has a very different sound signature I’m told. The price has dropped to $300, while the newer model is now at $500. HD 650 has also seen price drops (esp. on Amazon) and I got it for $287 (the lowest price to-date). It can be had for ~$325 on a good day (at the moment it’s at $300, but it’s fast changing). The price is now back at ~$460. The HD 650 is said to not be very far in clarity and sound stage from its bigger siblings the HD 700 and the HD 800, which sell for $700 and $1500 respectively. So for under $300, the HD 650 is a steal. However this is in the US. On Amazon.ca they both sell for $550 CAD (~$460 USD) and on Amazon.co.uk for £250 (~$380 USD). The Grado is hard to find for less than $600, often for more. They go for nearly $1100 CAD on Amazon.ca! Possibly because it’s a smaller shop. On the other hand, Sennheiser has been declining the warranty, I read somewhere, when not sold from an authorized dealer (i.e. when you get them for a discount). So I’m not sure if I inadvertently forfeited my warranty of 2 years by buying from Amazon Inc. (directly, not a reseller on Amazon,) but at least one reported that Sennheiser did eventually honor the warranty upon his insistence.

DAC

Since I wasn’t going to throw $500 on a pair only to feed it with sub-par source (aka soundcard on motherboard) I was planning on getting a DAC (digital-to-analog converter that takes a USB input and gives analog audio output) and an Amp combo (a DAC needs an amplifier to drive the headphones, otherwise the signal wouldn’t be heard). This would cost me the least. But since I got the HD 650 for practically half the budget, that liberated some funds. There is very little room for improving DACs beyond a certain point, which is very easy to attain, so I got the cheapest Hi-Fi DAC on the market: Schiit Modi 2 (yes, that’s read as you think it should be read—they are a funny bunch those guys. They have another model called Fulla, as in Fulla Schiit, and another called Asgard!) That set me back $99 USD. For the price, it’s the only DAC that support 24-bit 192Khz sample rate that I know of. Practically, 96Khz is beyond sufficient anyway (most SACDs are at 88Khz and CDs/DVDs are at 44.1Khz and 48Khz respectively, although DVDs and SACDs support 192Khz) but I naturally wanted the best for my money.

Amp

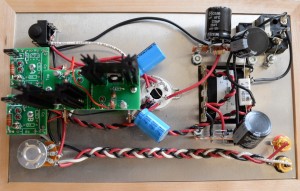

Now, for the Amp I went a bit, mmm, crazy. First, I skipped on 40-50 years of solid-state technology. Second, I went with a DIY kit, the Crack OTL Headphone Amplifier Kit (these guys aren’t with any less humor than Shiit, their larger kit is called S.E.X.). That set me back $279 USD, plus an upgrade called Speedball which retails for $125 USD, but was on special $20 when ordered with the amp (discounts and deals aren’t rare, just keep an eye on their website for offers). Building it should take a good weekend (possibly more, if your don’t rush it,) plus the Speedball on a separate weekend). As for the price, it’s the cheapest tube-amp with the highest praises on the market. I should add that this amp is one of the most recommended for the HD 650.

I’ve built the Crack first without the Speedball. This made it easier to test and troubleshoot. The sound was amazing by any standard I could think of. After a week I upgraded to the Speedball, but was disappointed to find out that due to counterfeit transistors (which were duly replaced by Bottlehead at no charge,) a part of the Speedball didn’t work.

After receiving the replacement transistors I finally built hooked up the complete Speedball and, unfortunately, yet again found a disappointment. Part of the Speedball circuit feeds the tube with a constant-current source. This, in theory, should make the background darker, that is, with lower floor noise. However, due to the design of this module, the Speedball also works as a high-frequency antenna. This is an issue when there are strong interference sources near the amp. Sources known to cause interference are CPUs and/or GPUs, and USB endpoints. As my music source is my workstation, I have all nearby. The interference is not audible on low to mid volume (without music source,) however on high it’s very clearly audible and annoying. For this reason, I had to disable the constant-current source of the Speedball. So I have a partial Speeball upgrade.

Results

The sound is phenomenal. I still find myself taken aback on some tracks that I never heard the way I do now. While this is a 300 Ohm can, it doesn’t take much power to drive them. Even on ipod they can be enjoyable, albeit on max volume. With a decent amp, like the Crack, the HD 650 sings. Comfort wise, I’ve had them on for 4-5 hours at a time (I know, I shouldn’t be sitting that long, but fun they are,) without either neck nor hearing fatigue, which for me count the most.

Even with a weaker bass than others, I’ve had goosebumps listening to a couple of tracks thanks to the deep bass. Angel, by Sarah Mclachlan, has a fantastically rich bass piano notes and there is little distraction to enjoy the vocal, which make the HD 650 truly shine (of course one should listen to lossless source, link is for reference only). Another track, from a very different genre, is Need To Feel Loved by Reflekt (Adam K & Soha Vocal Mix). This is a melodic electronic track with clear vocals. The bass takes me aback every time, and the vocals are clear even on high volume, which is hard to resist.

Bottom-line

Even though the Speedball didn’t workout for me in its complete form, I’m more than impressed and satisfied with the results. The cost for the setup isn’t trivial, in fact I’m still shocked at the amount of hard-earned currency I threw at this setup. But the investment should be good for a long time, and the reward even more so. The cables on the headphone are detachable and can be replaced. So does the velour earpads and the headband. I can also upgrade or replace any part without affecting the other.

It has been two months with this system and I have a hard time listening to anything else. Once used to music that sings, anything else is unacceptable. I wish I had built this years ago.

[*] Apparently, the process of cell aging starts as early as late teens. Until about 18, cells are in growth mode, which comes to an end and the (as yet) irreversible aging process starts. Cells from then on have a finite number of divisions that they can make before the inaccuracies in each division simply make it impossible for it to retain its form or function to divide further, so it dies irreplaceably. This is called cellular senescence, which happens at roughly 50 cell divisions. More on Wikipedia.